Containerization and Docker – An App Developer’s Arcadia

As part of my journey to better understand Development Operations (DevOps for the cool kids), I’m now looking into a relatively new tool that seems to have been received with extreme positivity: Docker.

What problem is Docker solving?

But it’s working on my machine!

Developers everywhere before Virtualization and Containerization

A common challenge for developers was working on an app that ran successfully on their own machine, but either stopped working altogether or did not perform as expected on another machine.

This was due to differences between how the developer’s environment was configured and how the other machine was configured, including the dependencies the app needed to run as expected, e.g. a different version of a piece of software the app relies on. This challenge is known as portability.
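To make the portability problem concrete, here is a minimal sketch that compares the dependencies on two machines and reports where they differ. The dependency names and version numbers are made up for illustration, and `environment_drift` is a hypothetical helper, not part of any real tool.

```python
# Hypothetical dependency versions on two machines (illustrative values only).
dev_machine = {"python": "3.11", "openssl": "3.0", "libpq": "15"}
other_machine = {"python": "3.8", "openssl": "3.0"}


def environment_drift(reference, target):
    """Return {dependency: (reference_version, target_version)} for every mismatch."""
    drift = {}
    for name, wanted in reference.items():
        found = target.get(name)  # None means the dependency is missing entirely
        if found != wanted:
            drift[name] = (wanted, found)
    return drift


if __name__ == "__main__":
    for name, (wanted, found) in environment_drift(dev_machine, other_machine).items():
        print(f"{name}: developer has {wanted}, other machine has {found or 'nothing installed'}")
```

Any entry in the result is a potential reason for "it works on my machine" to stop being true somewhere else.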

Before we continue, let’s define some key terms:

- Hardware – the physical components that make up a computer, e.g. hard drive, power supply, RAM etc.

- Software – a collection of data and instructions on a computer that performs specific tasks, e.g. Microsoft Word, Chrome Web Browser, Starcraft II (computer game)

- Operating system (OS) – software that controls a computer’s hardware, e.g. Windows 10, Android, macOS, Linux, iOS

- Virtual machine (VM) – an operating system (guest OS) that runs within an existing operating system (host OS)

- Hypervisor – the software that creates and runs virtual machines

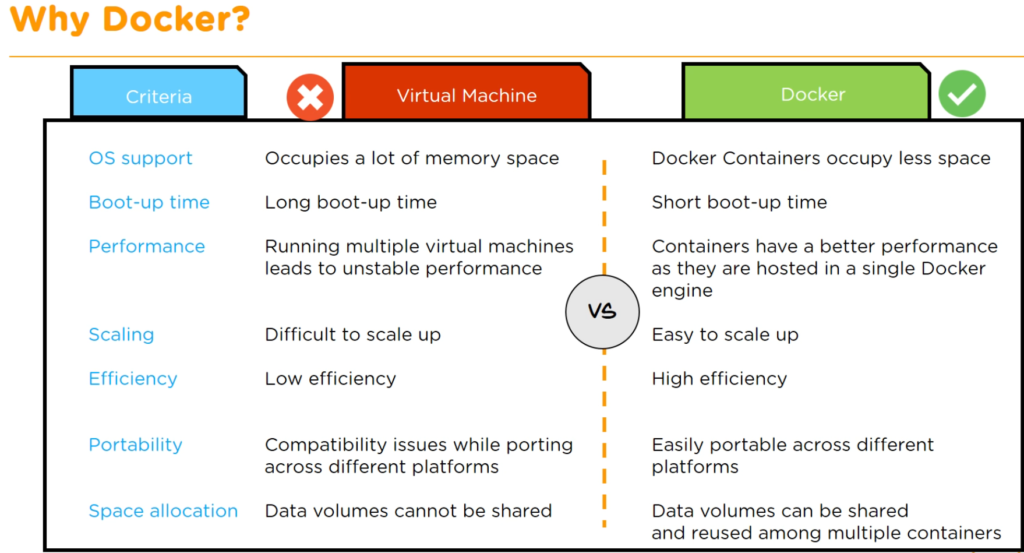

The first innovation to solve this problem of portability was virtualisation. Running a virtual machine that contained the identical operating system and the exact environment setup of the developer’s machine streamlined the deployment of applications across different machines. However, there is still a risk of incompatibility at the OS level of a VM.

What is Docker?

Docker is the next step in solving this challenge, as it is less resource intensive (memory and disk space), easier to manage and maintain, and more efficient in performance. This is due to the concept of containerization, which removes the need for a guest OS: containers share the host OS kernel and are therefore much more lightweight than VMs.

The 4 main Components of Docker:

- Client and Server

The client is the command line tool you use from a terminal window. The server is the Docker daemon, which builds and maintains images and manages the running of containers.

Commands are passed from Client –> Server: you type a command into the client, and the daemon carries it out.

- Images

These are read-only templates with the instructions used for creating containers. They are stored in a registry such as Docker Hub.

- Containers

A container is a software bucket comprising everything required to run a piece of software independently of anything else. Multiple containers can exist on the same host OS, and they are all isolated from each other, so the dependencies of one container cannot conflict with those of another.

- Registry

This is the open source, server-side service used for hosting and distributing images, similar to what GitHub does for source code.
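The relationship between images and containers can be illustrated with a toy model. This is only a sketch of the idea (one read-only template, many isolated instances) and is not how Docker is actually implemented; the `Image` and `Container` classes and the file contents are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Read-only template: a name plus the files baked into it."""
    name: str
    files: tuple  # tuple of (path, content) pairs, immutable by design


@dataclass
class Container:
    """An instance created from an image, with its own private copy of the files."""
    image: Image
    files: dict = field(default_factory=dict)

    @classmethod
    def from_image(cls, image):
        # Each container gets a fresh, writable copy of the image's files.
        return cls(image=image, files=dict(image.files))


image = Image(name="demo-app", files=(("/app/config", "debug=false"),))
first = Container.from_image(image)
second = Container.from_image(image)

first.files["/app/config"] = "debug=true"  # change made inside one container only
```

After the last line, `second` and `image` still hold the original `debug=false` value, which is the isolation property described above.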

The Docker Workflow

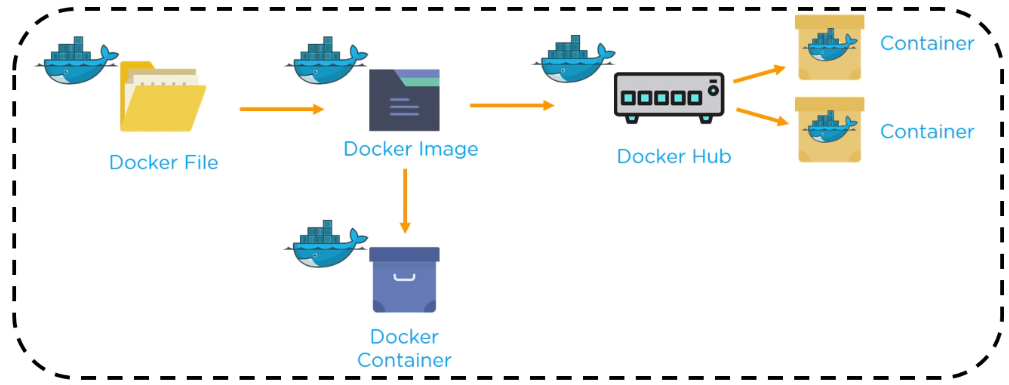

- A Dockerfile is used to create a Docker image with the docker build command

- A Docker image contains all of the application’s code and its dependencies

- Using the Docker image, a user can run the code by creating Docker containers from it

- Once a Docker image is built, it can be pushed to a registry such as Docker Hub for distribution

- From the registry, other users can pull the image to create new containers
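The workflow above maps onto a handful of docker CLI commands. The sketch below only assembles and prints them rather than running them, so it works without Docker installed. The image name `example-user/demo-app:1.0` is a made-up placeholder, and the build step assumes a Dockerfile exists in the current directory.

```python
import shlex

IMAGE = "example-user/demo-app:1.0"  # hypothetical repository name and tag


def workflow_commands(image, build_context="."):
    """Return the docker CLI invocation for each workflow step, in order."""
    return [
        ["docker", "build", "-t", image, build_context],  # Dockerfile -> image
        ["docker", "run", "--rm", image],                 # image -> container
        ["docker", "push", image],                        # image -> registry
        ["docker", "pull", image],                        # registry -> another machine
    ]


if __name__ == "__main__":
    for command in workflow_commands(IMAGE):
        print(shlex.join(command))
```

To actually execute a step, each list could be passed to `subprocess.run` on a machine with Docker installed. The push step would also need you to be logged in to the registry.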

Use Case Example

Selling a website used to be difficult, as the only way to do it was to sell the server on which the site was hosted. This could be a logistical nightmare, until Docker came around.

It meant you could package each component of the website, e.g. front end and back end, into its own image, and as long as the person you’re selling to also uses Docker, it was a matter of running containers from those images.

Conclusion

Docker is the latest evolution in DevOps, and it focuses on the deployment side of applications. It allows for a more consistent and stable experience of running an application regardless of where it is run. It also makes projects easier to scale and cuts the time spent on administration, leaving more time and effort for creating an amazing product.